
OpenAI’s latest creation, “Sora”, Promises to Revolutionise Video Creation

Published 2 months ago

The world of video creation is about to change forever with the emergence of Sora, an advanced AI model developed by OpenAI. Sora has the capability to generate mind-blowing videos from simple text prompts, opening up a whole new realm of possibilities for content creators, filmmakers, educators, and social media users. By harnessing the power of artificial intelligence, Sora can bring vivid imagination to life on the screen, creating highly detailed scenes, complex camera motion, and multiple characters with vibrant emotions.

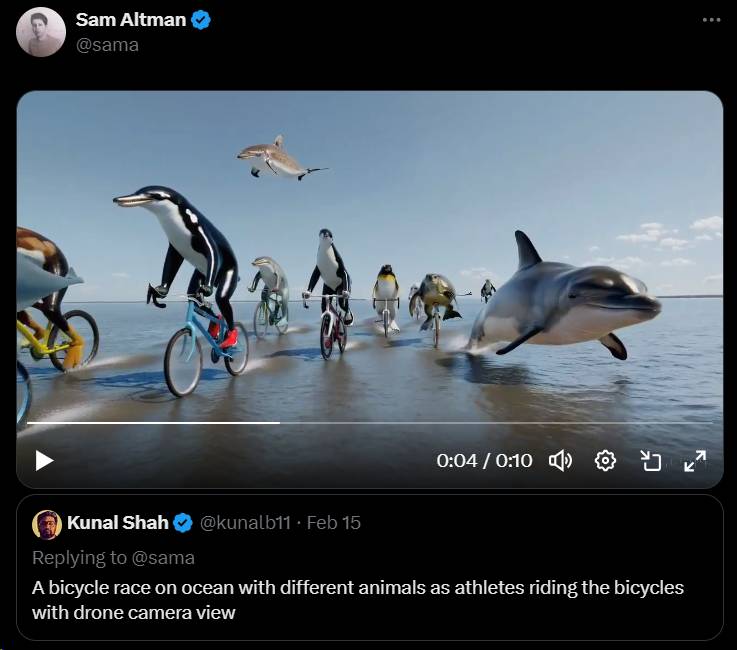

If you can imagine it, Sora can probably bring it to life. Imagine, for instance, a bicycle race on the ocean, with different animals as the athletes riding the bicycles, filmed from a drone’s-eye view, as CRED founder Kunal Shah did.

What is Sora and How Does It Work?

Sora is an AI model developed by OpenAI that specializes in text-to-video synthesis: turning a description written in natural language into a video. This is a highly challenging task for AI models, because it requires them to understand the meaning and context of the text as well as the visual and physical aspects of the video they produce.

To overcome these challenges, Sora is built on a deep neural network, a type of machine learning model that learns from data to perform complex tasks. It has been trained on a vast dataset of videos and images covering various topics, styles, and genres, and it uses the details in a user’s text prompt, such as the subject, action, location, time, and mood, to generate a video that aligns with the user’s intent. This results in some quite frankly stunning examples of video.
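Before a network can learn from video, the footage has to be broken into units it can process. OpenAI’s technical report says Sora first compresses videos into a lower-dimensional latent representation and then cuts that representation into "spacetime patches", which play roughly the role that tokens play for a language model. The Python sketch below illustrates only the cutting step, under stated assumptions: it works on raw grayscale pixel values rather than a learned latent, and the function name `patchify` and the patch sizes are hypothetical, since OpenAI has not published its implementation.

```python
def patchify(video, pt, ph, pw):
    """Cut a video (frames x rows x cols) into flattened spacetime patches.

    Hypothetical illustration: each patch covers `pt` consecutive frames and a
    `ph` x `pw` block of pixels, so it carries information across both space
    and time. Sora reportedly patchifies a compressed latent, not raw pixels.
    """
    frames, rows, cols = len(video), len(video[0]), len(video[0][0])
    if frames % pt or rows % ph or cols % pw:
        raise ValueError("video dimensions must be divisible by the patch size")
    patches = []
    for t0 in range(0, frames, pt):          # step through time
        for y0 in range(0, rows, ph):        # step down the frame
            for x0 in range(0, cols, pw):    # step across the frame
                patches.append([
                    video[t][y][x]
                    for t in range(t0, t0 + pt)
                    for y in range(y0, y0 + ph)
                    for x in range(x0, x0 + pw)
                ])
    return patches


# Toy 4-frame, 4x4-pixel "video" whose values encode (frame, row, column).
video = [[[t * 100 + y * 10 + x for x in range(4)] for y in range(4)] for t in range(4)]
patches = patchify(video, 2, 2, 2)
print(len(patches), patches[0])  # 8 [0, 1, 10, 11, 100, 101, 110, 111]
```

Because each patch spans several frames as well as a block of pixels, it captures motion as well as appearance, and videos of different lengths or resolutions simply produce different numbers of patches.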

Understanding Sora’s Approach and the Challenges of Text-to-Video Synthesis

Text-to-video synthesis presents several challenges that Sora aims to tackle. One of the primary challenges is that the AI model must comprehend the physical world and simulate it in motion: how objects and characters look, move, and interact, and how their environment affects them.

Additionally, Sora needs to honor any stylistic preferences the user spells out, such as a particular film stock, color palette, or camera style.

Under the hood, OpenAI describes Sora as a diffusion model that uses a transformer architecture, the same family of neural network behind its GPT language models. Training on a large and diverse collection of videos and images teaches it the patterns, styles, and visual elements it draws on when generating new content.

When given a text prompt, Sora does not search its training data for matching clips and splice them together. Instead, it starts from a video that looks like static noise and gradually removes that noise over many steps, guided by the prompt, until a new video emerges that matches the user’s intent. This is what allows Sora to generate highly detailed and dynamic videos.

Take, for example, this video of a pair of Labradors podcasting from a sunny mountaintop.
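Diffusion models such as Sora generate by starting from pure noise and removing a little of it at each step. The Python sketch below shows that sampling loop on a toy scale. Everything in it is an illustrative assumption, not OpenAI’s code: the "video" is a short list of numbers, `toy_denoiser` is a hypothetical stand-in that simply looks up a fixed clean signal for the prompt (a real network would predict it from the noisy input, the noise level, and the text), and the update is a standard deterministic, DDIM-style step with a linear noise schedule.

```python
import random

# Hypothetical "knowledge" of the toy model: the clean signal each prompt should produce.
TOY_TARGETS = {
    "sunny mountaintop": [0.9, 0.8, 0.7, 0.6],
}


def toy_denoiser(x, sigma, prompt):
    """Hypothetical stand-in for a trained network.

    A real model predicts the clean video from the noisy input `x`, the noise
    level `sigma`, and the prompt; this oracle ignores `x` and `sigma`.
    """
    return TOY_TARGETS[prompt]


def sample(prompt, steps=10, sigma_max=10.0, seed=0):
    """Deterministic (DDIM-style) diffusion sampling on a toy 1-D signal."""
    rng = random.Random(seed)
    size = len(TOY_TARGETS[prompt])
    # Noise levels shrink linearly from sigma_max down to exactly 0.
    sigmas = [sigma_max * (1 - i / steps) for i in range(steps + 1)]
    # Start from pure noise: the "static" a generation begins with.
    x = [rng.gauss(0.0, sigma_max) for _ in range(size)]
    for sigma, sigma_next in zip(sigmas[:-1], sigmas[1:]):
        x0_hat = toy_denoiser(x, sigma, prompt)
        # Estimate the noise currently in x, then keep only a smaller share of it.
        eps_hat = [(xi - ci) / sigma for xi, ci in zip(x, x0_hat)]
        x = [ci + sigma_next * ei for ci, ei in zip(x0_hat, eps_hat)]
    return x


print([round(v, 3) for v in sample("sunny mountaintop")])  # [0.9, 0.8, 0.7, 0.6]
```

At each step the sampler keeps only a shrinking fraction (`sigma_next / sigma`) of the estimated noise, so at the final step, where the noise level reaches zero, only the predicted clean signal remains.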

Understanding the text prompt is at the core of Sora’s video generation process. OpenAI says it trained Sora on videos re-captioned with highly descriptive text, a technique introduced with DALL·E 3, and that it uses GPT to expand short user prompts into more detailed descriptions before they reach the video model. This helps Sora follow the details the user asks for.

For example, given the prompt “A stylish woman walks down a Tokyo street filled with warm glowing neon and animated city signage,” Sora interprets the description and generates a brand-new video that brings the scene to life.

Here is the result. Feast your eyes.

Sora goes beyond simply generating videos from text prompts; it also offers ways to tailor the result. The style and mood of a video can be specified in the prompt itself, and OpenAI has shown that Sora can restyle an existing video, changing its setting or visual look, using an editing technique called SDEdit.

For instance, if the user wants a video with a cinematic style, shot on 35mm film, and with vivid colors, Sora can apply these effects to the video, adjusting the lighting, color, and camera angles accordingly. This level of customization allows users to create videos that align with their artistic vision and personal preferences.

The Importance of Sora and its Applications

Sora represents a significant advancement in the field of AI and video generation. Its combination of language understanding, visual perception, and modeling of physical dynamics showcases the immense potential of AI for creating engaging and immersive content across various domains.

Advancements in AI for Video Generation

Sora’s capabilities highlight the progress made in AI for video generation. By combining natural language processing and deep learning, Sora demonstrates the power of AI in understanding textual prompts and translating them into visually compelling videos. This advancement opens up new avenues for creative storytelling, enabling filmmakers, content creators, and artists to bring their ideas to life more efficiently and effectively.

Creating Engaging and Immersive Content

One of the key benefits of Sora is its ability to create engaging and immersive content. Whether it’s movie trailers, short films, animations, or documentaries, Sora can help filmmakers and storytellers visualize their ideas and concepts. By generating videos that align with the user’s intent, Sora empowers content creators to produce compelling and original videos that captivate audiences.

Sora could also help viewers discover new and interesting content. Because its videos can be tailored to individual preferences and interests, viewers could explore a wide range of content that resonates with their tastes.

Some Applications of Sora

Movie Trailers, Short Films, and Animations

Sora’s ability to generate videos from text prompts makes it an invaluable tool for filmmakers. It can assist in creating captivating movie trailers, short films, and animations by visualizing the director’s vision. Filmmakers can give Sora a text prompt summarizing their story, and Sora will generate a video that captures its essence and entices audiences.

Enhancing Existing Videos with New Elements

Sora’s versatility extends to enhancing existing videos. Video editors and producers can leverage Sora to add special effects, change backgrounds, or insert new characters into their videos. This capability allows for greater creativity and variety in video production, offering endless possibilities for modifying and improving videos.

Generating Educational Videos

Educators and learners can benefit from Sora’s ability to generate educational videos. By providing text summaries of scientific concepts, historical events, or cultural phenomena, Sora can create informative and engaging videos that enhance understanding and retention. Sora’s educational videos can serve as valuable resources for self-paced learning or classroom instruction.

Personalised Videos for Social Media

Sora enables social media users and influencers to create personalized videos that express their personality and emotions. Whether it’s birthday greetings, travel diaries, or memes, Sora can help users create unique and fun videos that resonate with their audience. These personalized videos can foster deeper connection and interaction with friends and followers.

Visualising Ideas and Scenarios

Sora’s text-to-video synthesis capabilities extend beyond entertainment and education. Designers and innovators can leverage Sora to visualize their ideas, scenarios, and dreams. Whether it’s designing a product, imagining a future concept, or exploring a fantasy world, Sora can help bring these visions to life. By generating videos from text descriptions, Sora gives designers a quick way to share their prototypes and visions and to gather feedback and suggestions.

Sora’s Challenges and Limitations

It’s not all sunshine and roses. While Sora represents a remarkable leap forward in AI-driven video generation, it still faces certain challenges and limitations that need to be acknowledged.

Limited Public Availability

Currently, Sora is not publicly available and is accessible only to a small group of researchers and creative professionals for feedback and testing purposes. OpenAI has yet to announce the release date and details regarding the public availability of Sora. The exact pricing structure and licensing model also remain unknown at this time.

Concerns Regarding Content Generation

OpenAI has set certain terms of service for the use of Sora, aimed at preventing the creation of content that involves extreme violence, sexual content, hateful imagery, celebrity likeness, or the intellectual property of others. OpenAI actively monitors the usage of Sora and reserves the right to revoke access or modify the generated output if any violation or abuse is detected.

Given the nature of content generation, there is also a possibility that Sora may generate inaccurate, inappropriate, or harmful content. This includes scenarios where Sora may misrepresent facts, violate privacy, or promote bias. Users must exercise caution and responsibility when utilizing Sora to ensure the content generated aligns with ethical guidelines.

Handling Complex and Ambiguous Prompts

Sora’s ability to handle complex or ambiguous prompts is currently limited. Prompts that involve multiple sentences, logical reasoning, or abstract concepts may pose challenges for Sora. Additionally, Sora may face difficulties in generating coherent or consistent videos that require temporal continuity, causal relationships, or a narrative structure. Users should be mindful of these limitations and tailor their prompts accordingly.

If you’re curious to learn more about Sora and see its capabilities firsthand, OpenAI has published an in-depth overview of the model along with examples of its output. This resource offers insights into Sora’s functionality, applications, and potential to transform text prompts into captivating videos.

Sam Altman, the CEO of OpenAI, unveiled Sora on Twitter. His tweet included a video demonstration, generated by Sora, featuring a dog walking on the moon. This announcement provides a glimpse into the possibilities offered by Sora and highlights its potential impact on the world of video creation.

Sora has the potential to revolutionise the world of video creation. By pairing a diffusion model with a transformer architecture, Sora can transform simple text prompts into visually stunning and immersive videos whose style can be steered directly from the prompt. Its applications span various domains, including filmmaking, education, social media, and design.

But while Sora represents a significant advancement, it also faces challenges and limitations that need careful consideration. Responsible use is crucial to ensure that what it generates is accurate, appropriate, and ethical. Sora holds the promise of transforming the way we create and experience videos, unlocking new realms of creativity, imagination, and storytelling, but it could just as easily empower bad actors to explore the worst of humanity. As always, the power and potential to shape our future lie in our hands.